LIMITS

This section summarizes the limits of the current version of the SQP software.

SQP was developed using data from more than 250 Multitrait-Multimethod (MTMM) experiments carried out in the first three rounds of the European Social Survey (ESS) and 87 experiments carried out by other research agencies (Saris and Gallhofer, 2007). These experiments resulted in a database of 3,726 survey questions of known quality. Based on this database, the MTMM quality estimates were related to the formal and linguistic characteristics of the questions in a meta-analysis, which allows the quality of new survey questions to be predicted. SQP is the software used to obtain these quality predictions for new survey questions (Saris and Gallhofer, 2014). The kinds of survey questions for which SQP can provide a reliable prediction are therefore limited to the types of questions involved in the MTMM experiments. These limits are specified below.

The SQP team expects most of these limits to be addressed in a new version of SQP. In the meantime, please be aware of them and take them into account before drawing any conclusions.

1 Countries for which one can obtain a prediction

SQP can provide quality predictions only for the countries involved in the MTMM experiments, since these experiments are the ones used in the meta-analysis. The countries for which the current version of SQP can give a prediction are the following:

Austria, Belgium, Czech Republic, Denmark, Estonia, Finland, France, Germany, Great Britain, Greece, Ireland, Netherlands, Norway, Poland, Portugal, Slovakia, Slovenia, Spain, Sweden, Switzerland, Ukraine and United States.

The basic idea behind SQP is that the quality of a question can be predicted on the basis of the topic of the question, its formulation, the response scale, the mode of data collection, etc. Many linguistic characteristics of the formulation have been included to cope with language differences. However, these characteristics are not enough to cover all the differences in quality across countries estimated in the MTMM experiments. Therefore, a country variable was introduced to capture other effects related to the reaction patterns in the different countries. As a consequence, SQP cannot, in principle, predict the quality of questions for countries that are not included in the list above.

In other words, selecting a country in SQP captures only the country effect identified from the MTMM estimates; the effect of language enters the prediction through the linguistic characteristics of the question. SQP quality predictions are therefore available for each of the countries listed above in combination with any language (see FAQ 2 for the distinction between MTMM estimates and SQP predictions). For example, suppose users want to study immigration in the Netherlands. For Spanish immigrants, they could select Spanish as the language and the Netherlands as the country. This combination covers the cultural effect of living in the Netherlands, while the linguistic characteristics capture the effect of the language of the question.

It is important to note that the effect of the country variable is, in general, marginal. Therefore, users who would like a prediction for a question in a country that is not on the list are advised to choose, as prediction country, a listed country that is culturally similar to the country of interest (see FAQ 5 for how to use a 'Prediction country'). In that case, the uncertainty about the correctness of the prediction increases, and this should be taken into account. If possible, users should therefore try several prediction countries. For example, for Canada several countries could be used: the United States, Ireland and the United Kingdom for questions in English, and France, Belgium and Switzerland for questions in French. The choice should depend on which prediction country is most similar in cultural characteristics to the Canadian sample population. Note that users will not obtain a different prediction simply by changing the country of an existing question in SQP: they need to create and code the question once for each prediction country in order to compare the results (see Limit 2).
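
Once the question has been coded separately for several prediction countries, the spread of the resulting predictions gives a rough idea of the extra uncertainty. The sketch below assumes the predictions have already been obtained from SQP and typed in by hand; the function `prediction_spread` and the example values are hypothetical and only illustrate the comparison. It does not call SQP.

```python
from __future__ import annotations

# Countries for which the current SQP version can give a prediction (Limit 1).
SUPPORTED_COUNTRIES = {
    "Austria", "Belgium", "Czech Republic", "Denmark", "Estonia", "Finland",
    "France", "Germany", "Great Britain", "Greece", "Ireland", "Netherlands",
    "Norway", "Poland", "Portugal", "Slovakia", "Slovenia", "Spain", "Sweden",
    "Switzerland", "Ukraine", "United States",
}


def prediction_spread(predictions: dict[str, float]) -> tuple[float, float, float]:
    """Return (lowest, highest, range) of the quality predictions obtained
    for separately coded copies of the same question, one per prediction country."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    unsupported = set(predictions) - SUPPORTED_COUNTRIES
    if unsupported:
        # SQP cannot, in principle, predict for countries outside the list.
        raise ValueError(f"not a valid prediction country: {sorted(unsupported)}")
    low = min(predictions.values())
    high = max(predictions.values())
    return low, high, high - low


# Made-up values for an English-language question aimed at a Canadian sample.
low, high, spread = prediction_spread({"United States": 0.62, "Ireland": 0.58})
print(f"quality between {low:.2f} and {high:.2f} (range {spread:.2f})")
```

A wide range suggests that the choice of prediction country matters for that question and that the prediction should be interpreted with extra caution.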

2 The 'Country' characteristic

As explained in Limit 1, the 'Country' characteristic of a survey question coded in SQP has an effect on the quality of that question. This means that questions with the same formal and linguistic characteristics will not necessarily receive the same quality prediction in different countries, because the quality is affected by the country in question.

With the current SQP version, users who want to detect the effect of the country on the quality need to code the characteristics of the question separately for each country. For example, if a user codes the formal and linguistic characteristics of a question for a particular country (e.g. United Kingdom), SQP gives a quality prediction for that country and those characteristics. If the user then wants to see the impact of selecting the United States instead of the United Kingdom, without any change in the formal and linguistic characteristics, the user should add the same question to SQP again, this time selecting the United States as the country and coding the same formal and linguistic characteristics. Editing the details of the United Kingdom question and changing its 'Country' characteristic to United States will not work, because SQP does not recalculate the quality prediction in that case.

In other words, to know the quality of the same question in different countries, users need to add the question and code its characteristics once for each country.

3 Topics of the questions

It is impossible to perform MTMM experiments for every question used in survey research. Additionally, it is difficult to formulate alternative forms of some survey questions. Moreover, for background variables, memory effects could be present if different question alternatives were designed for the experiments. Background variables can also contain measurement errors, as has been shown by Alwin (2007), using quasi-simplex models, and Schröder (2014), using scaling procedures and latent variable models. Although the current version of SQP is not a good source of quality information for these types of questions, quality information regarding background variables is available in Alwin (2007).

By combining the SQP predictions with the estimates of Alwin (2007) for background variables, quality estimates can be obtained for a very large number of topics used in the social sciences, and the correlations between all these variables can therefore also be corrected for measurement errors.
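
As a minimal sketch of such a correction, the function below applies the classic correction for attenuation: the observed correlation is divided by the square root of the product of the two qualities. It assumes that each quality is the proportion of observed variance explained by the variable of interest (a value between 0 and 1) and that the two questions share no method variance. When they do share a method effect (for instance, the same scale format in the same survey), that effect must also be taken into account, which this sketch does not do. The function name and the input values are hypothetical.

```python
import math


def correct_for_attenuation(observed_r: float, quality_1: float, quality_2: float) -> float:
    """Estimate the correlation between two variables of interest from the
    observed correlation between the questions measuring them.

    quality_1, quality_2: quality of each question as a proportion of
    observed variance. Assumes uncorrelated measurement errors.
    """
    for quality in (quality_1, quality_2):
        if not 0.0 < quality <= 1.0:
            raise ValueError("quality must lie in (0, 1]")
    return observed_r / math.sqrt(quality_1 * quality_2)


# Hypothetical inputs: observed r = 0.30, qualities 0.64 and 0.81.
print(round(correct_for_attenuation(0.30, 0.64, 0.81), 3))  # 0.417
```

A corrected correlation above 1 in absolute value can signal that the assumptions do not hold, for example because of a shared method effect.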

4 Absence of an 'Agree-Disagree' (AD) characteristic

The classic decomposition of the question-answering process has four components: (1) comprehension of the item, (2) retrieval of relevant information, (3) use of that information to make the required judgments and (4) selection and reporting of an answer (Tourangeau, Rips and Rasinski, 2000). However, many authors state that 'Agree-Disagree' (AD) questions require extra effort from respondents (e.g. Carpenter and Just, 1975; Clark and Clark, 1977; Trabasso, Rollins, and Shaughnessy, 1971). For AD questions, the response process would be as follows: (1) respondents must read the stem and understand its literal meaning; (2) they must look deeper into the question to discern the underlying dimension of interest to the researcher; (3) having identified this dimension, they must place themselves on the scale of interest; and (4) they must translate this judgement into the appropriate AD response option, depending on the valence of the stem.

Saris et al. (2010) have shown that the distinction between AD and 'Item-Specific' (IS) requests is a very important one: the quality is affected greatly by whether an AD or an IS request is used. Nevertheless, the current version of SQP does not explicitly distinguish AD requests from other requests.

Furthermore, Revilla, Saris and Krosnick (2013) have also shown that for AD scales the quality decreases when the number of scale points increases, whereas in general the quality increases with the number of points. For SQP users, this means that:

SQP probably tends to overestimate the quality of questions using an AD request.

Users should not trust the predicted effect of the number of scale points on the quality for AD requests.

For instance, if a 5-point AD scale is evaluated and the 'Potential improvements' tool suggests that increasing the number of points to 11 would improve the quality, users should not follow this suggestion. They should keep the 5-point scale (Revilla, Saris and Krosnick, 2013) or, even better, switch to an IS scale (Saris et al., 2010).
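
The advice above can be written as a simple screening rule to apply before acting on a suggestion from the 'Potential improvements' tool. This is a hypothetical helper for illustration only: it does not call SQP, and its inputs (the request type and the current and suggested number of scale points) are assumed to be noted down by the user.

```python
def review_scale_suggestion(request_type: str, current_points: int, suggested_points: int) -> str:
    """Screen a suggested change in the number of scale points (hypothetical helper).

    request_type: "AD" for agree-disagree requests, "IS" for item-specific requests.
    """
    if request_type == "AD" and suggested_points != current_points:
        # SQP's predicted effect of the number of points is not trusted for AD
        # requests: for AD scales quality tends to decrease as points are added
        # (Revilla, Saris and Krosnick, 2013).
        return "keep the current AD scale or, better, switch to an IS scale"
    return "suggestion can be considered"


print(review_scale_suggestion("AD", 5, 11))  # keep the current AD scale or, better, switch to an IS scale
print(review_scale_suggestion("IS", 5, 11))  # suggestion can be considered
```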

In addition, it is recommended that users code questions such as the one presented below as 'Complex concepts'.

Request for an answer: Please rate if you agree or disagree with the following statement: a high income is important for well-being.

Answer options:

1. Strongly disagree

2. Disagree

3. Neither disagree nor agree

4. Agree

5. Strongly agree

Indeed, for the question above, while the concept 'Importance' is a simple concept, the use of an AD format increases the complexity of the concept being evaluated, which in this case would be 'An agreement regarding the importance of income for well-being', a complex concept. Users should identify such questions using the option 'Other' under 'Complex concepts' in the 'Concept' characteristic.

5 Modes of data collection

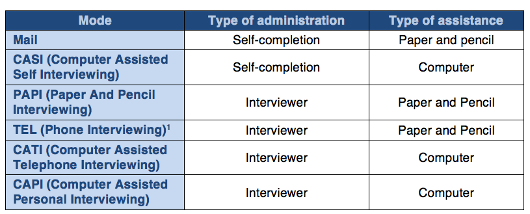

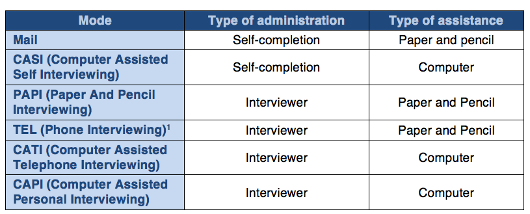

Although a variety of modes of data collection were used in the MTMM experiments on which SQP is based, not all of them are included. The data collection modes in the current version of SQP are identified as follows:

The modes of data collection that the current version of SQP takes into account, based on the MTMM experiments performed, are:

1Landline phones

1Landline phones

Web surveys are a special form of CASI. Therefore, users can use these characteristics to classify web surveys. Moreover, web surveys are now also completed using different devices such as smartphones and tablets. These devices may lead to different qualities. This point requires further research.

1. Type of administration: Interviewer administered or self completion

2. Type of assistance: Computer or paper and pencil assisted

The modes of data collection that the current version of SQP takes into account, based on the MTMM experiments performed, are:

Web surveys are a special form of CASI. Therefore, users can use these characteristics to classify web surveys. Moreover, web surveys are now also completed using different devices such as smartphones and tablets. These devices may lead to different qualities. This point requires further research.
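
The two characteristics combine into four broad classes of mode. The mapping below is an illustrative sketch using common survey-methodology labels (CAPI: computer-assisted personal interviewing, CATI: computer-assisted telephone interviewing, PAPI: paper-and-pencil interviewing, CASI); the labels and the function name are assumptions and are not necessarily the option names shown in SQP.

```python
from enum import Enum


class Administration(Enum):
    INTERVIEWER = "interviewer administered"
    SELF = "self-completion"


class Assistance(Enum):
    COMPUTER = "computer assisted"
    PAPER = "paper-and-pencil"


# Illustrative labels only. These two characteristics alone do not say whether
# an interview is face-to-face or by telephone, nor which device a web
# respondent used.
MODE_LABELS = {
    (Administration.INTERVIEWER, Assistance.COMPUTER): "computer-assisted interview (e.g. CAPI, CATI)",
    (Administration.INTERVIEWER, Assistance.PAPER): "paper-and-pencil interview (PAPI)",
    (Administration.SELF, Assistance.COMPUTER): "CASI, including web surveys",
    (Administration.SELF, Assistance.PAPER): "paper self-completion questionnaire (e.g. mail)",
}


def classify_mode(administration: Administration, assistance: Assistance) -> str:
    """Return a broad mode label for a combination of the two characteristics."""
    return MODE_LABELS[(administration, assistance)]


# A web survey is coded as self-completion + computer assisted,
# whatever device the respondent used.
print(classify_mode(Administration.SELF, Assistance.COMPUTER))
```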

6 Response scales

The current version of SQP can only provide predictions for questions with closed response options, so open questions are not covered. In addition, new technologies allow all kinds of new response formats to be used, such as drag-and-drop and drop-down menus. Such formats cannot yet be coded accurately because they were not included in the MTMM experiments or the SQP meta-analysis. Thus, predictions for most of these new formats are not available in the current version of SQP.

1. 'Magnitude estimation' and 'Line production'

Although many MTMM questions using 'Magnitude estimation' and 'Line production' response scales are available in the internal database, SQP is currently unable to provide a prediction for these types of questions. Therefore, if one of these characteristics is selected, SQP reports a prediction of -99, which indicates that no prediction is available.
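
Because -99 is a code rather than a quality value, it should be treated as missing if SQP predictions are copied out for further analysis; averaging it with genuine quality values would give a meaningless result. A minimal sketch, assuming the predictions have been collected into a Python list by hand (the list values and the function name are hypothetical):

```python
from typing import List, Optional

# Code reported by SQP for 'Magnitude estimation' and 'Line production' scales.
MISSING_PREDICTION = -99


def mean_quality(predictions: List[float]) -> Optional[float]:
    """Average the valid quality predictions, skipping the -99 code.

    Returns None when no valid prediction is left.
    """
    valid = [p for p in predictions if p != MISSING_PREDICTION]
    if not valid:
        return None
    return sum(valid) / len(valid)


# Hypothetical predictions for four questions, one of them using line production.
print(mean_quality([0.71, 0.64, -99, 0.58]))
```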

7 Questionnaire layout

Most surveys involved in the MTMM experiments that are now part of the SQP meta-analysis were interviewer administered, except for the older CASI interviews of the telepanel. Therefore, in the current version of SQP, the layout on paper or screen has not been coded, except for the showcards. Thus, the effect of the layout, as studied for example by Dillman (2000), has so far been ignored.

8 References

Alwin, D. F. (2007). Margins of error: A study of reliability in survey measurement. Hoboken: Wiley.

Carpenter, P. A., and Just, M. A. (1975). Sentence comprehension: A psycholinguistic processing model of verification. Psychological Review, 82, 45-73.

Clark, H. H., and Clark, E. V. (1977). Psychology and language. New York: Harcourt Brace.

Dillman, D. A. (2000). Mail and Internet Surveys: The Tailored Design Method. New York: John Wiley.

Revilla, M., Saris, W. E., and Krosnick, J. A. (2013). Choosing the number of categories in agree-disagree scales. Sociological Methods and Research, 43, 73-97 (first published online December 10, 2013; print issue February 2014). doi:10.1177/0049124113509605

Saris, W. E., and Gallhofer, I. N. (2007). Design, evaluation and analysis of questionnaires for survey research. Hoboken: Wiley.

Saris, W. E., and Gallhofer, I. N. (2014). Design, evaluation and analysis of questionnaires for survey research. Second Edition. Hoboken: Wiley.

Saris, W. E., Revilla, M., Krosnick, J. A., and Shaeffer, E. M. (2010). Comparing Questions with Agree/Disagree Response Options to Questions with Construct-Specific Response Options. Survey Research Methods, 4(1), 61-79. Available at: https://ojs.ub.uni-konstanz.de/srm/article/view/2682

Schröder, H. (2014). Two methods to improve the measurement of education in comparative research. Amsterdam: Vrije Universiteit.

Tourangeau, R., Rips, L. J., and Rasinski, K. (2000). The Psychology of Survey Response. Cambridge: Cambridge University Press.

Trabasso, T., Rollins, H., and Shaughnessy, E. (1971). Storage and verification stages in processing concepts. Cognitive Psychology, 2, 239-289.
